The first time Dr. Selin Aras saw the demo, she didn’t smile.

She didn’t frown either.

She simply watched the screen with the stillness of someone who had spent her career learning the difference between a good idea and a good idea that would hurt people.

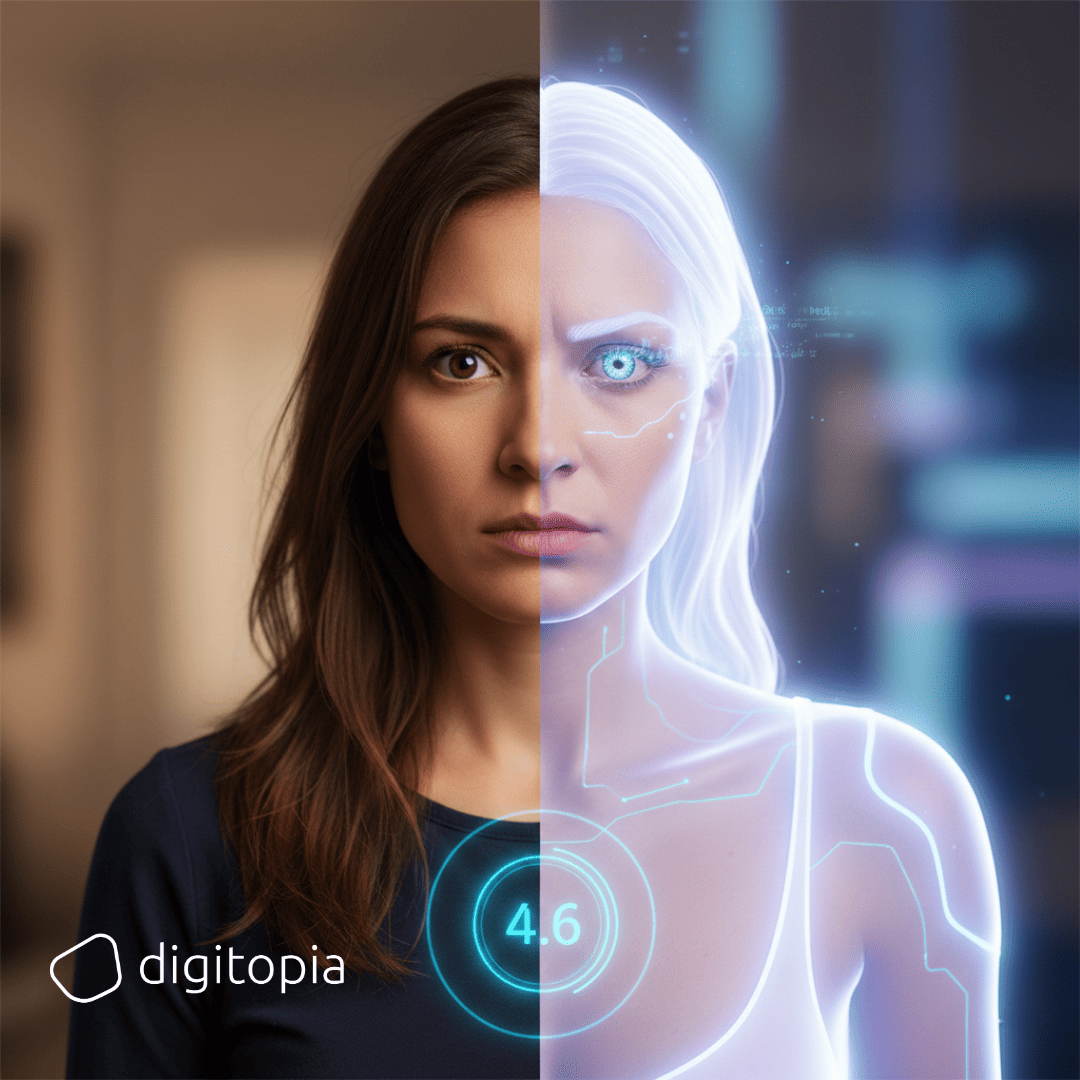

The app looked deceptively friendly: clean typography, soft colors, and a single number floating at the top like a promise.

HealthScore: 4.6

Below it, a progress ring pulsed gently, as if the phone itself were breathing.

A few tiles offered suggestions:

“10-minute walk: +12 points”

“Hydration check-in: +4 points”

“Sleep goal reached: +18 points”

“Annual screening scheduled: +150 points”

At the bottom, a banner:

Unlock a 9% premium discount when you reach 4.8.

The product manager spoke with the optimism of someone who believed humans were essentially rational.

“We’re making it simple,” he said. “Healthy behaviors become points. Points become savings. Savings become loyalty. Everyone wins.”

Selin looked up at the executive team, and then at the woman beside her—CEO Dr. Nermin Kaya, founder of Helia Health, former clinician, now a leader with the calm intensity of someone who had seen both life and spreadsheets up close.

Nermin nodded toward Selin as if saying: Your turn.

Selin didn’t speak about UI or conversion funnels. She spoke about gravity.

“This can work,” she said. “Or it can become a social-credit system with a stethoscope.”

The room went quiet. Not hostile. Alert.

Nermin’s eyes stayed steady. “That is exactly why I hired you,” she said.

Helia Health wasn’t a typical healthcare company. It offered private insurance and provider networks, but it also ran wellness programs, chronic care management, and a growing telemedicine platform. It competed in a market where customer expectations were rising faster than trust.

Customers wanted convenience and personalization.

Regulators demanded privacy and fairness.

Doctors wanted less bureaucracy.

Finance wanted margins.

In that collision of wants, Helia had made a strategic bet: if it could help members stay healthier, everyone benefited. Lower claims, higher satisfaction, better outcomes.

The board had approved the plan under a name that sounded warm and inevitable:

Project Luma: Health, Rewarded.

And they had recruited Selin as Chief Digital Officer to deliver it.

Her mandate was simple in the board deck and complicated in real life:

- Build the app.

- Put it in customers’ hands.

- Make it valuable—for them and for the company.

Selin accepted for one reason: she believed the future of healthcare required better relationships with patients, not just better billing.

But she also knew the truth most digital leaders learn the hard way:

When you gamify a human system, you don’t just change behavior.

You change identity.

A Company of Experts and Doubters

Selin’s first month at Helia felt like entering a cathedral full of competing gods.

Clinical teams worshipped outcomes.

Actuaries worshipped risk models.

IT worshipped stability.

Marketing worshipped growth.

Compliance worshipped the rulebook.

And every function believed it was the one preventing catastrophe.

The naysayers were polite at first.

In steering committees, they used familiar phrases:

- “This is not our core.”

- “We don’t have the data maturity.”

- “Customers won’t engage.”

- “This will trigger privacy backlash.”

- “This is just a shiny app.”

- “We are not a fitness company.”

The sharpest critic was Efe Gün, Helia’s longtime Head of Claims Operations. He ran a complex system of approvals and adjudication like a railway dispatcher. Efficiency was his pride. Predictability was his religion.

“This app is going to create noise,” he said in one meeting. “Thousands of micro-interactions. Complaints. Disputes. And then people will demand discounts for… walking.”

Selin didn’t argue. She asked, “If people walked more and needed us less, would that be noise?”

Efe’s expression didn’t change. “They’ll game it.”

Selin nodded. “Yes. Some will. Which is why we design for integrity.”

The CEO, Nermin, supported Selin publicly, but demanded rigor privately.

“You’ll get one chance,” she told Selin. “Not because the idea is fragile, but because trust is.”

Selin agreed. “Then we will build trust into the architecture.”

She formed a cross-functional “Luma Core” team: product, data science, clinical, behavioral science, cybersecurity, privacy counsel, customer experience, and—importantly—claims.

Efe didn’t like joining. Selin insisted anyway.

“Transformation doesn’t succeed by avoiding critics,” she said. “It succeeds by turning their fears into design requirements.”

The Design: Points Without Punishment

Selin refused to build a system that punished sickness.

That was her first non-negotiable. It would violate both ethics and strategy.

Luma would reward positive behaviors, but it would not penalize health conditions—especially those influenced by genetics, socioeconomic realities, or trauma.

The scoring model was built around three principles:

1) Consent and Control

Members could opt in. They could see what was tracked. They could disable categories. They could delete their data. Helia didn’t own their bodies.

2) Equity by Design

The scoring used personal baselines rather than universal standards. A 10-minute walk for one person could be heroic; for another, trivial. Luma celebrated improvement, not perfection.

3) Medical Humility

The app would never imply that high scores meant moral superiority. Health was not virtue. Health was a complex state.

Selin also introduced what she called the “Quiet Clause.”

No public leaderboards. No “compare yourself to your neighbors.” No social shaming.

The app would be a companion, not a judge.

Marketing pushed back.

“Competition drives engagement,” Ines, head of Growth, argued. “People want to share achievements.”

Selin answered carefully. “Sharing is fine. Ranking is dangerous. Black Mirror’s ‘Nosedive’ showed us the cost of turning life into ratings.”

Nermin backed her. “We’re not building a popularity economy,” the CEO said. “We’re building a health economy.”

So they found the middle path:

- Members could share milestones if they chose.

- They could form small private circles (family, friends).

- They could join “care cohorts” for chronic conditions, moderated by clinicians.

- But the HealthScore remained private by default.

They embedded value into the program in ways that felt tangible:

- premium discounts tied to sustained engagement, not short bursts

- rewards for preventive care and adherence

- tailored coaching for high-risk groups

- faster access to certain services for members in active care plans

- personalized care navigation that reduced friction

On the company side, Luma created a new asset: a living stream of voluntary, consent-based health signals and interactions. Not to exploit people, but to understand them—segment needs, anticipate care gaps, reduce avoidable events.

Selin made one more move that impressed even the skeptics:

She created an independent ethics review group, including external advisors, to review scoring changes and campaign designs.

Compliance relaxed slightly.

Claims looked… wary, but less hostile.

The CEO smiled for the first time in months.

“Let’s launch,” Nermin said.

The Rise: When People Choose to Play

The first three months felt like a miracle.

App downloads exceeded projections. The onboarding flow—designed like a gentle conversation rather than a legal trap—had high completion rates. Members liked the idea of making small improvements and seeing them reflected in points.

More importantly, they liked the benefits:

- discounts that felt earned

- coaching that felt personal

- reminders that felt helpful, not nagging

- appointments that were easier to schedule

- telemedicine integrated seamlessly

The early wins were operational too.

Claims volume didn’t spike; it shifted. There were fewer avoidable ER visits among engaged members. Preventive screenings increased. Chronic care adherence improved in key cohorts.

Efe, the claims head, brought Selin a report with a reluctant tone.

“I don’t like this,” he said, sliding the paper over.

Selin read the first line: Claims cost trend improved in Luma-active population.

She looked up. “You don’t like good news?”

Efe sighed. “I don’t like being wrong.”

Selin smiled politely. “Then we’ll make it easier for you: keep trying to prove it won’t work.”

Efe almost laughed. Almost.

Nermin used the momentum to negotiate partnerships: pharmacies, gyms, diagnostics providers, mental health platforms. Each integration added convenience and, crucially, made the app feel like a doorway to care rather than an advertisement.

Luma became a habit.

And habits, Selin knew, were where systems gained power.

That was when the twist arrived.

Not from technology. Not from regulators.

From a group of people who refused to be optimized.

The Protest: The Right to Get Sick

They called themselves The Unscored.

At first, they were a small online community, posting essays and videos about what they called “wellness coercion.” They criticized the cultural obsession with performance, productivity, and constant self-improvement.

Their manifesto was strangely poetic:

“We are not projects.

We are not dashboards.

We are allowed to be tired.

We are allowed to be sick.

We are allowed to refuse the metrics.”

Then they started showing up outside Helia’s headquarters, quietly, with signs that looked like art installations.

MY BODY IS NOT A KPI

DON’T GAMIFY MY BLOOD

I CLAIM THE RIGHT TO BE IMPERFECT

SICKNESS IS NOT FAILURE

A journalist described them as “hippies with data literacy.”

Another compared them to the philosophical resistance in Juli Zeh’s The Method, a novel about a society where health becomes a civic duty and dissent becomes illness.

The story went viral.

And then, inevitably, it became political.

A talk show host mocked the group. A senator defended them. Social media polarized: one side calling them irresponsible, the other calling Helia dystopian.

Selin watched the headlines accumulate with a familiar sensation: the feeling of standing in front of a system you built and realizing the world will interpret it in ways you didn’t design for.

Nermin called Selin into her office. She had her clinician’s calm and her CEO’s urgency.

“This is a trust event,” Nermin said. “Not a PR event.”

Selin nodded. “Agreed.”

“We need to respond,” Nermin continued. “But how we respond matters.”

Selin took a breath. “We don’t fight them. We listen. And then we evolve the program so that it becomes what it should have been from the start: voluntary, supportive, and non-judgmental. More choice, more transparency, less implied morality.”

Nermin leaned forward. “Do we pause the program?”

Selin shook her head. “If we pause, we let others define the narrative. We should improve it in public.”

Outside, the Unscored held a silent demonstration. They wore small badges with no star rating—just a blank circle.

A visual refusal.

And then something happened that threatened to turn protest into scandal.

A whistleblower—anonymous—leaked an internal slide deck to a news outlet.

The headline was brutal:

“INSURER USES HEALTH APP TO PROFILE CUSTOMERS.”

The deck wasn’t entirely wrong. It showed segmentation models, propensity scores, and retention strategies.

It also omitted the consent design and ethical constraints.

The story wasn’t about what Helia was doing.

It was about what people feared Helia could do.

Fear spreads faster than facts.

Within days, app store reviews dropped. Some members churned. Regulators requested clarifications. The board demanded a crisis update.

Efe resurfaced with a single sentence in a steering meeting:

“I told you they’d game it. And now the narrative is gaming us.”

Selin didn’t snap back. She simply said, “Then we need to prove what the system is—and what it is not.”

The Response: Designing Dignity Into the Product

Selin and Nermin made a decision that shocked marketing and irritated finance.

They introduced a new mode in the app:

UNSCORED MODE

In Unscored Mode:

- the app continued to offer guidance and access, but it stopped displaying a single composite HealthScore

- rewards shifted from “score thresholds” to “participation milestones”

- members could choose to engage in preventive care and coaching without feeling ranked

- discounts were framed as “wellness participation savings,” not “good behavior bonuses”

- data collected for analytics was minimized and clearly explained

- the language changed: from “achieve” to “support,” from “unlock” to “access,” from “optimize” to “care”

Selin also launched The Luma Charter, a public pledge:

- No penalties for sickness.

- No pricing discrimination based on app data.

- No selling health behavior data to third parties.

- No public ranking systems.

- Full transparency and control for members.

- External ethics oversight, with findings published annually.

The board worried about precedent. The CFO worried about margin.

Nermin’s answer was clear:

“Trust is our margin.”

To address the leaked deck, Selin did something rare in corporate crises: she published a plain-language explainer of Helia’s segmentation and profiling approach—what it did, what it didn’t do, and why.

She framed segmentation not as manipulation, but as service:

- identify members who need support

- tailor care navigation

- reduce friction

- avoid unnecessary interventions

- improve outcomes, not extract value

Then she invited the Unscored leadership to a moderated discussion with Helia’s ethics panel.

Most people expected conflict.

Instead, something more interesting happened: the Unscored asked rigorous questions. Selin answered with rigor. They found common ground: people wanted agency.

Not everyone joined the app again. But the narrative shifted.

Helia wasn’t forcing wellness.

It was offering it, with choice.

The app store ratings recovered. Engagement stabilized.

And then, unexpectedly, Luma surged again—because customers began sharing a different kind of story:

“This is the first health app that doesn’t make me feel guilty.”

Guilt, Selin realized, was the enemy of sustainability.

The Second Rise: A Health Program That People Trust

With the crisis handled, Selin returned to execution.

She expanded the back-end capabilities, using the app’s consent-based signals to improve real operations:

- better cohort-based care pathways

- earlier interventions for chronic patients who requested support

- predictive staffing for telemedicine

- integrated medication adherence support

- improved claims triage with fewer unnecessary delays

- redesigned customer journeys for high-friction processes

The company began to learn.

Not in a dystopian way.

In a practical way.

Luma also became a partner ecosystem anchor. Providers liked it because it reduced no-shows and improved adherence. Customers liked it because it simplified navigation. Helia liked it because it reduced avoidable costs and improved retention.

Even Efe, the former skeptic, became a guardian of the program—because claims operations improved with fewer preventable spikes.

In a quarterly review, he said something that made Selin blink:

“I still think people will try to game the points,” he said. “But… you built a system that makes gaming less attractive than genuine engagement.”

Selin nodded. “That’s what happens when value is real.”

The CEO’s strategy shifted from “program” to “platform.”

Helia launched Luma internationally. Competitors rushed to copy it, but without Helia’s consent architecture and dignity guardrails, their versions triggered backlash.

Helia gained market share.

The company became known not merely as a payer, but as a care partner.

And the Unscored?

They didn’t disappear. They evolved too.

They became a civic voice reminding society that health cannot become a moral ranking. Helia occasionally collaborated with them on public education about autonomy and ethics.

Sometimes the best opposition is the kind that keeps you honest.

The Ending: Succession Without Drama

Five years after Selin joined Helia, Nermin Kaya stood on a stage in front of employees and partners.

Her hair was a bit grayer. Her voice was as steady as ever.

“I’m retiring,” she said. “It’s time.”

The room held its breath. Founders rarely leave without turbulence.

But Nermin had planned this like a clinician plans a careful handoff.

“There’s only one person,” she continued, “who understands what Helia has become and what it must protect—our mission, our trust, and our future.”

She turned to Selin.

“Selin Aras will be the next CEO of Helia Health.”

Applause rose like weather.

Later, in a quieter moment, Selin and Nermin walked through the Helia campus, past screens showing Luma engagement metrics—now presented differently: not as star ratings, but as care journeys completed, preventive milestones achieved, and satisfaction reported.

“You were right,” Nermin said. “Gamification is risky.”

Selin replied softly. “It’s not the game that’s risky. It’s what the game implies about human worth.”

Nermin stopped walking and looked at Selin.

“What do you think made it work?”

Selin didn’t say “AI” or “data” or “platform.”

She said, “We gave people a way to get healthier without telling them they were bad for being human.”

Nermin smiled. “Keep it that way.”

Selin watched her mentor leave. What she felt wasn’t triumph, exactly.

It was responsibility.

Because now she would lead a company whose product wasn’t just insurance or apps.

It was a relationship with people’s bodies and fears and hopes.

As CEO, Selin issued her first internal memo with a title that sounded like a warning and a promise:

WE WILL NEVER RATE HUMANITY.

And then she added a second line:

WE WILL EARN TRUST, ONE CHOICE AT A TIME.

Helia continued to grow.

Customers continued to use Luma.

Not because they wanted to chase an artificial perfection.

But because the program had evolved into something more mature:

A system that made health easier—without turning health into a social status.

In a world that loved ratings, that was the rarest kind of success.

What this story tells us (without breaking the spell)

- Gamification changes identity, not just behavior. Design for dignity, not addiction.

- Voluntary participation and transparency are strategic, not merely ethical. Trust is a competitive moat.

- Health scoring must avoid moral implication. Reward participation and improvement, not perfection or comparative ranking.

- Data value must be mutual. Customers will share signals when they receive tangible, respectful benefits.

- Segmentation and profiling can be service—if governed. Clear guardrails prevent the slide into coercion.

- The loudest critics can be your best design partners. They reveal where your product can be misinterpreted—and misused.